Semantic Audio Tools for Radio Production

Chris Baume / PhD thesis / April 2018

Abstract

Radio production is a creative pursuit that uses sound to inform, educate and entertain an audience. Radio producers use audio editing tools to visually select, re-arrange and assemble sound recordings into programmes. However, current tools represent audio using waveform visualizations that display limited information about the sound.

Semantic audio analysis can be used to extract useful information from audio recordings, including when people are speaking and what they are saying. This thesis investigates how such information can be applied to create semantic audio tools that improve the radio production process.

An initial ethnographic study of radio production at the BBC reveals that producers use textual representations and paper transcripts to interact with audio, and waveforms to edit programmes. Based on these findings, three methods for improving radio production are developed and evaluated, which form the primary contribution of this thesis.

Audio visualizations can be enhanced by mapping semantic audio features to colour, but this approach had not been formally tested. We show that with an enhanced audio waveform, a typical radio production task can be completed faster, with less effort and with greater accuracy than a normal waveform.

Speech recordings can be represented and edited using transcripts, but this approach had not been formally evaluated for radio production. By developing and testing a semantic speech editor, we show that automatically-generated transcripts can be used to semantically edit speech in a professional radio production context, and identify requirements for annotation, collaboration, portability and listening.

Finally, we present a novel approach for editing audio on paper that combines semantic speech editing with a digital pen interface. Through a user study with radio producers, we compare the relative benefits of semantic speech editing using paper and screen interfaces. We find that paper is better for simple edits of familiar audio with accurate transcripts.

Statement of originality

This thesis and the work to which it refers are the results of my own efforts. Any ideas, data, images or text resulting from the work of others (whether published or unpublished) are fully identified as such within the work and attributed to their originator in the text, bibliography or in footnotes. This thesis has not been submitted in whole or in part for any other academic degree or professional qualification. I agree that the University has the right to submit my work to the plagiarism detection service TurnitinUK for originality checks. Whether or not drafts have been so-assessed, the University reserves the right to require an electronic version of the final document (as submitted) for assessment as above.

Acknowledgements

My foremost thanks go to my supervisor, Prof. Mark Plumbley, for his enthusiasm, encouragement, wisdom and guidance over the years. Huge thanks also to my co-supervisors: Dr. Nick Bryan-Kinns, Dr. Janko Ćalić and Prof. David Frohlich, for their invaluable suggestions, feedback and advice, and for helping me navigate an unfamiliar field of research.

I have been extremely privileged to have been able to undertake this research as part of my employment at the BBC. My heartfelt thanks go out to Prof. Graham Thomas, Dr. Frank Melchior, Chris Pike and Samantha Chadwick for giving me this rare opportunity, and for their steadfast support throughout the process. Many thanks to my colleagues in the BBC R&D Audio Team for their patience, support and help with proofreading, but also for being brilliant people to work with.

This work was only made possible through the involvement of colleagues from BBC Radio, who volunteered their time despite their demanding workload. My sincere thanks go to all of the radio producers who took the time to participate in the user studies and contribute to the design of the interfaces. Thanks also to Deborah Cohen, Hugh Levinson and John Goudie for allowing me access to their teams, and to Jonathan Glover his advocacy and encouragement.

Thanks to Liam Bowes, Carl Garner and Richard Sargeant from Anoto for their support in the development of the digital pen interface, and to Matt Haynes and Matt Shotton from BBC R&D, whose software formed part of the semantic speech editor.

Finally, I’d personally like to thank my wife, Nancy, for her love, encouragement, patience and belief in me throughout this process. I couldn’t have done it without you.

The work in this thesis was fully funded by the British Broadcasting Corporation as part of the BBC Audio Research Partnership.

Licence

This work is copyright 2018 Chris Baume, and is licensed under the Creative Commons Attribution 4.0 International Licence. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ or send a letter to Creative Commons, PO Box 1866, Mountain View, CA 94042, USA.

List of Figures

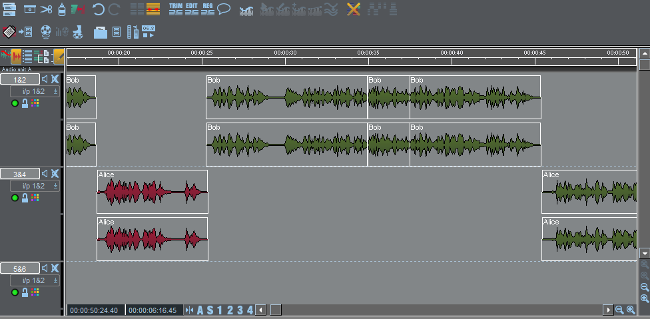

2.1.1.1 User interface of the SADiE digital audio workstation from Prism Sound, which is used at the BBC for radio production.

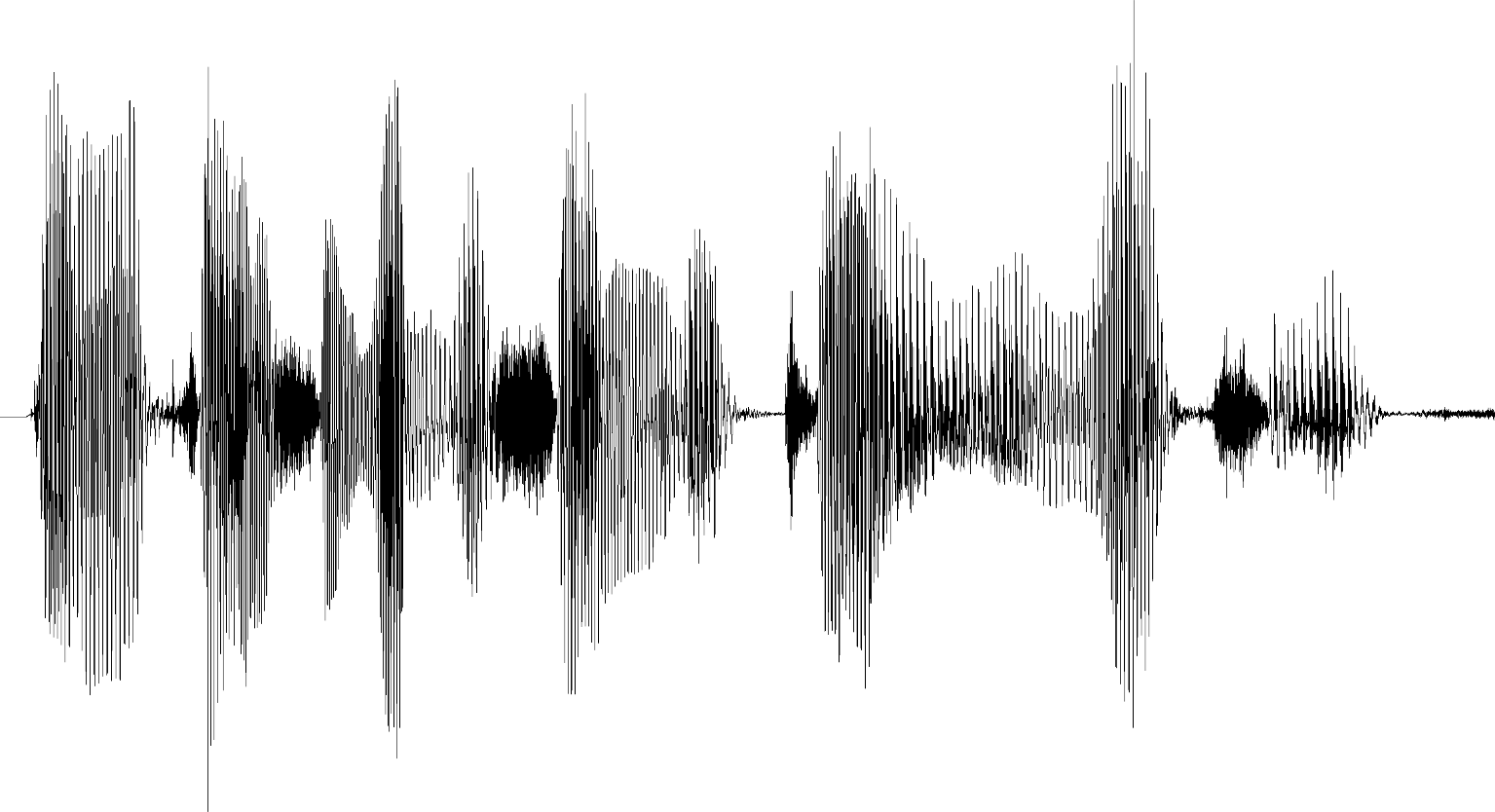

2.1.2.1.1 Example audio waveforms of speech, demonstrating the effect of zoom on the visibility of frequency information.

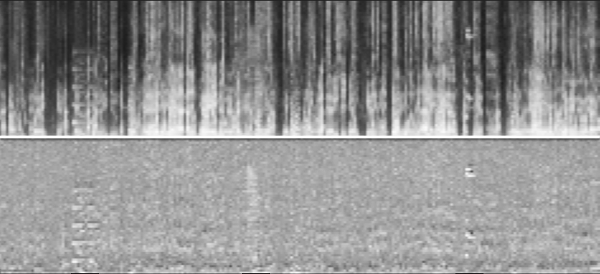

2.1.2.2.1 An example audio spectrogram of speech.

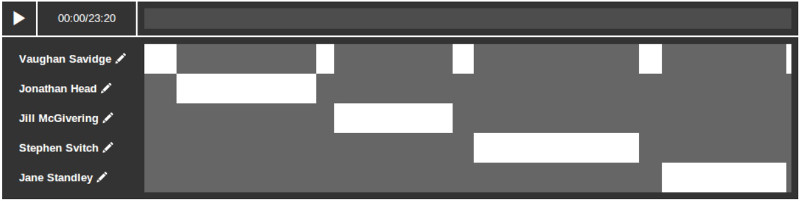

2.2.2.2.1 Speaker diarization and recognition interface in the BBC World Service Archive prototype, from Raimond et al. (2014)

2.2.2.3.1 Example ASR transcript.

2.3.1.1 Demonstration of the “bouba/kiki effect” — an example of crossmodal perception.

2.3.2.1.1 Lens view for magnifying an audio waveform at the current playhead position, from Gohlke et al. (2010). Republished with permission.

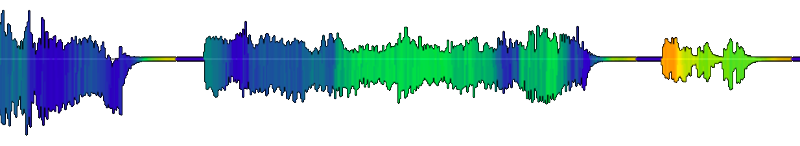

2.3.2.2.1 An audio waveform colourised by using pseudocolour to map the spectral centroid of the audio to a rainbow colour gradient.

2.3.2.2.2 False colour audio visualization of an episode of the BBC radio programme “From Our Own Correspondent”, from (Mason, Evans, and Sheikh 2007).

2.3.2.2.3 Comparison of a normal spectrogram (top) and a saliency-maximised spectrogram (bottom), from Lin et al. (2013). Republished with permission.

2.4.3.1 User interface of a semantic speech editor for creating “audio stories”, from Rubin et al. (2013). Republished with permission.

2.4.4.1 User interface of Hyperaudio Pad — a semantic speech editor for video, from Boas (2011). Drag-and-drop is used to select clips from the left transcript and arrange them on the right transcript.

3.2.1.2.1 The newsroom in BBC New Broadcasting House. Image source: BBC.

3.2.1.2.2 Physical layout of Radio Summaries in the BBC newsroom.

3.2.1.2.3 Desk of the Radio Summaries Assistant Editor. The switching interface controls the output of the monitor and headphones.

3.2.1.2.4 User interface for Electronic News Production System (ENPS). The Media Object Server (MOS) plugin is used to find and edit audio clips in the dira! radio production system.

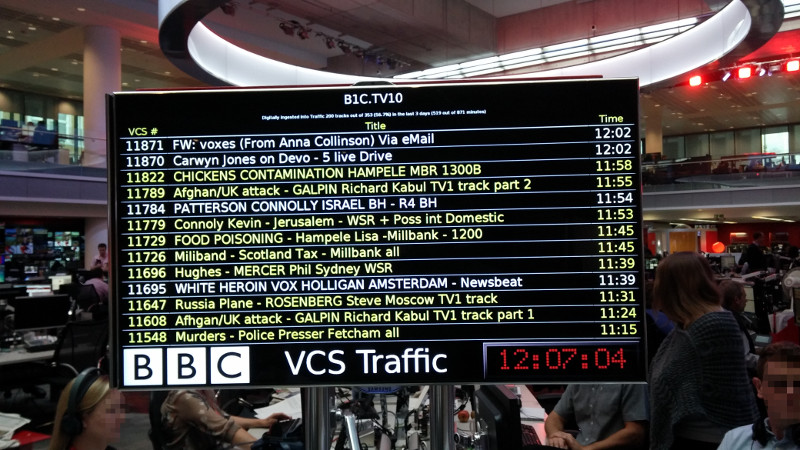

3.2.1.2.5 Arrivals board in BBC newsroom, showing the ID number, name and arrival time of each audio clip. “VCS” is the colloquial name for the dira! radio production system, as it is made by a company that used to be called VCS.

3.2.1.2.6 Operational sequence diagram of news summaries production, partitioned by role and location.

3.2.2.2.1 Physical layout of the drama studio and cubicle.

3.2.2.2.2 Cubicle of studio 60A, showing the view of the Panel SM into the studio.

3.2.2.2.3 Radio drama edit suite.

3.2.2.3.1 Operational sequence diagram of radio drama recording, partitioned by role and location.

3.2.2.3.2 An annotated drama script page.

3.2.2.3.3 Operational sequence diagram of radio drama editing, partitioned by role and location.

3.2.3.3.1 Operational sequence diagram of radio documentary production, partitioned by role and location.

4.1.3.1 Screenshot of the user interface used to display the audio visualizations, complete the segmentation task and measure the user’s performance.

4.1.3.2 The audio visualization conditions that were evaluated.

4.2.1 Rejected responses by audio clip.

4.2.1.1 \(p\)-values of pairwise comparisons for the performance metrics. Statistically significant differences are shaded.

4.2.2 Participant demographics.

4.2.2.1 Mean task load index values with 95% confidence intervals. Lower values represent better performance.

4.2.2.2 Condition preferences of participants.

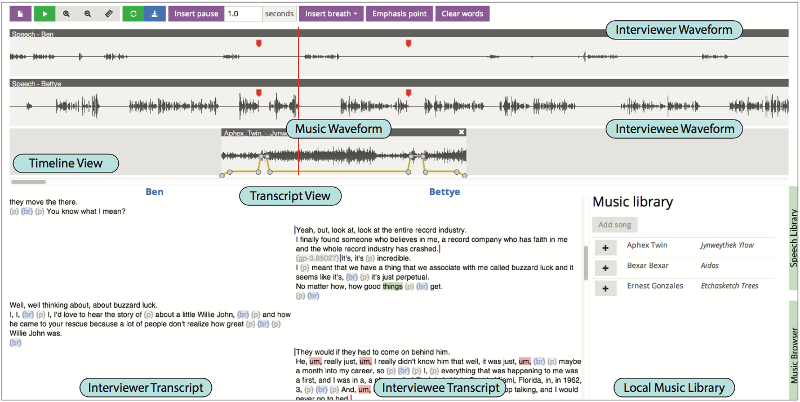

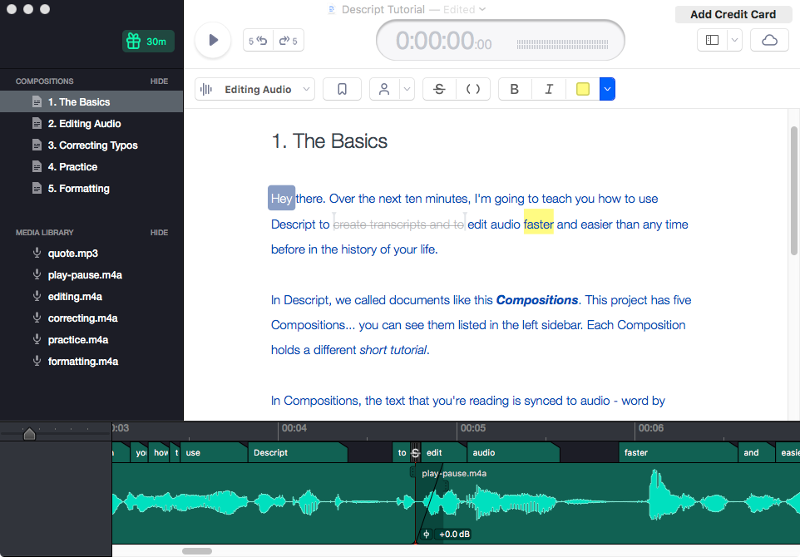

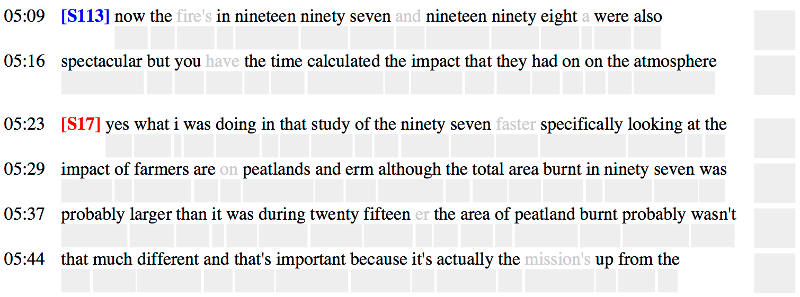

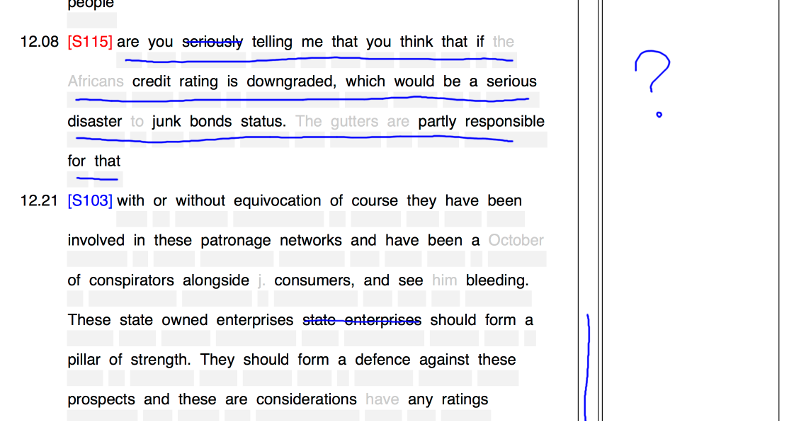

5.2.5.1 User interface of Dialogger, with highlighted features.

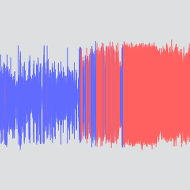

5.4.2.3.1 Printed transcript that has been highlighted by P2.

5.4.3.1.1 Time taken to complete the task for each condition, compared to the original audio length.

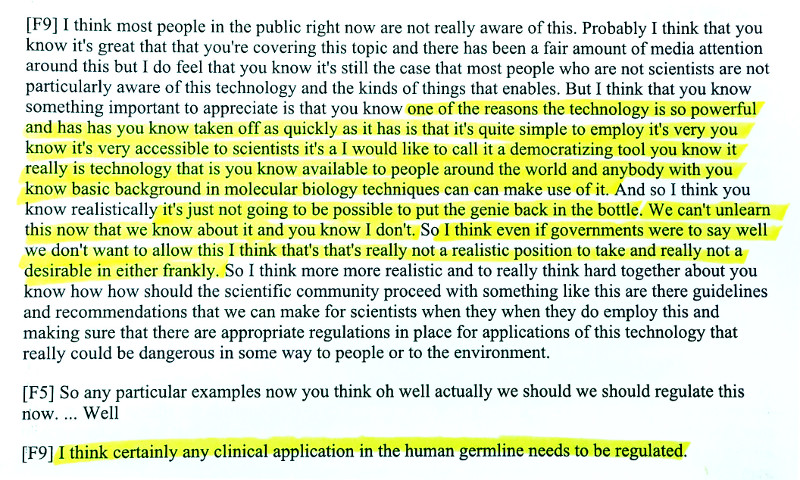

5.5.6.1 User interface of the Descript semantic speech editor, a commercial semantic speech editing system developed independently of our research.

6.2.1.1 Design of our paper mock-up.

6.3.1.1 Layout of the paper interface.

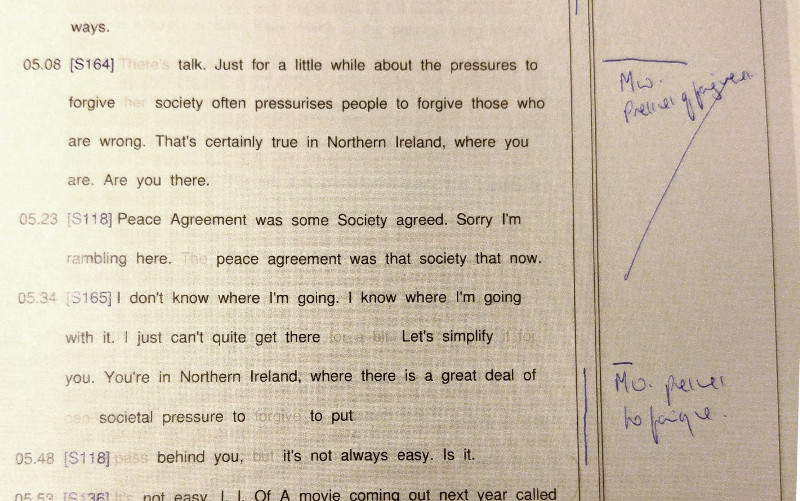

6.3.1.2 Example of the paper interface system, with freehand annotations that demonstrate its use.

6.3.1.3 Flow diagram of PaperClip, showing the integration between the paper and screen interfaces, flowing from left to right.

6.3.2.1 User interface of the screen-based semantic speech editor.

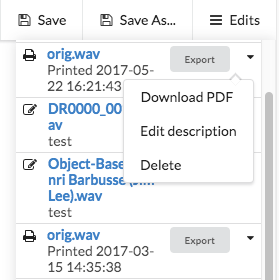

6.3.2.2 Close-up of the edits sidebar of the screen-based semantic speech editor, showing a button to export audio, and a dropdown menu with the option to download a PDF.

6.5.1.1 Mean average scores for usefulness and usability. There is no statistically significant difference between the scores.

6.5.2.5.1 Annotations made on paper in the margin by P5. The content is segmented using horizontal lines and labels in the margin. The middle segment is marked as not needed using a diagonal line.

A.1.1.1 Flow diagram of the Dialogger system. Excluded components are shaded.

A.2.1.1 Conceptual diagram of the Vampeyer visualization framework

A.3.1 Example user interface showing BeatMap in use.

List of Tables

2.3.1.1 Audio-visual mappings supported by strong evidence, from Spence (2011).

4.1.5.1 Descriptions of the radio programmes used as the source of the audio clips.

4.2.1.1 \(p\)-values of pairwise comparisons for the performance metrics. Statistically significant differences are shaded.

4.2.2.1 \(p\)-values of pairwise comparisons for the perceptual metrics. Statistically significant differences are shaded.

4.3.1 Summary of confirmed findings for each hypothesis, with \(p<.05\).

5.4.1 Topics, categories and codes that emerged from analysis of the interviews in Stage 4 and the observation notes from Stages 1, 2 and 3.

6.2.3.1 Natural gestures used by each participant to edit their transcripts.

6.4.1 Evaluation study participant demographics.

6.5.1 Themes, categories and number of codes that resulted from the quantitative analysis of the interviews and observations.

List of abbreviations

| ANOVA | Analysis of Variance |

| AP | Associated Press |

| ASR | Automatic Speech Recognition |

| BBC | British Broadcasting Corporation |

| DAW | Digital Audio Workstation |

| EDL | Edit Decision List |

| ENPS | Electronic News Production System |

| HSD | Honest Significant Difference |

| HSV | Hue Saturation Value |

| IP | Internet Protocol |

| ISDN | Integrated Services Digital Network |

| LER | Low Energy Ratio |

| LSD | Least Significant Difference |

| MFCC | Mel-Frequency Cepstral Coefficient |

| MOS | Media Object Server |

| NPR | National Public Radio |

| RDC | Radio Drama Company |

| RGB | Red Green Blue |

| RMS | Root Mean Square |

| ROT | Recording of Transmission |

| RQDA | R package for Qualitative Data Analysis |

| SM | Studio Manager |

| SMD | Speech Music Discrimination |

| SPSS | Statistical Package for the Social Sciences |

| SUS | Software Usability Scale |

| TLX | Task Load Index |

| WAV | Waveform Audio File Format |

| WER | Word Error Rate |

| ZCR | Zero Crossing Rate |

Ethics

Chapter 3 received ethics approval from the Queen Mary University of London Ethics of Research Committee, under the reference

QMERC1386b.Chapter 4 received ethics approval from the Queen Mary University of London Ethics of Research Committee, under the reference

QMREC1348d.Chapter 5 received a favourable ethical opinion from the University of Surrey Ethics Committee, under the reference

UEC/2015/054/FEPS.Chapter 6 received a favourable ethical opinion from the University of Surrey Ethics Committee, under the reference

UEC/2015/116/FEPS.

A note on writing style

Throughout this thesis, the term “we” is used to refer to the author’s own work. This acknowledges that that the work has been influenced by discussions with supervisors and colleagues, and follows recent trends in academic writing style. At times when a statement represents the sole opinion of the author, or when reader is invited to think alongside, or perhaps disagree with, the author, phrases such as “the author believes” are deliberately used.

1 Introduction

Radio broadcasting is the use of radio waves to transmit sound to a large audience. The first regular radio broadcasts in the UK began in 1922 when a consortium of radio manufacturers formed the BBC (BBC 2015). Almost a century later, radio is still one of the mass media, with 90% of the UK adult population listening to the radio each week for an average of 21 hours (RAJAR 2017). In the UK alone, there are 50 national, 329 local and 251 community radio stations (Office of Communications 2017, pp. 6-127).

Traditionally, radio has been consumed over the airwaves, but the Internet has changed the way audio content is distributed and consumed. On-demand radio allows the audience to listen to a radio programme whenever they like, and podcasting allows audio content to be downloaded as a digital file. Over 200,000 podcasts are available through iTunes (Morgan 2015) and approximately 10% of the UK adult population regularly listen to podcasts (RAJAR and IpsosMori 2017). The distinction between radio content and podcasts is beginning to blur as broadcasters are repurposing some of their speech-based radio output as podcasts (Office of Communications 2017, p. 98).

The British Broadcasting Corporation (BBC) has the largest share of radio listening, and is the most popular source of podcasts, in the UK (Office of Communications 2017, p. 107). The research presented in this thesis was funded by the BBC and conducted during, and as part of, the author’s employment at BBC Research and Development. BBC R&D promotes technological innovation that supports the BBC’s mission to enrich people’s lives with programmes and services that inform, educate and entertain (BBC Charter 2016 art. 15). This is achieved through the research and development of broadcast technology, including for the production and distribution of audio content.

1.1 Motivation

One of the distinguishing characteristics of radio is that it is based exclusively on sound. Although listeners have no visual reference, sound stimulates the imagination and creates pictures in the mind’s eye. Radio is not limited by the size of the screen in the way that television is. Sound design and music can be used to produce scenes for virtually any scenario, which may otherwise be impossible or too expensive to put on screen. As the old adage goes “the pictures are so much better on the radio”.

Humans use sound to communicate through language and music, which can richly convey complex ideas and elicit powerful emotion. Despite this, sound is simply the result of vibration in a medium such as air. As sound is based on vibration, it cannot be “frozen” — it can only exist over a period of time. The temporal nature of sound gives it unique properties that make it both a fascinating and challenging medium to work with.

Unlike pictures, which can be viewed and searched at a glance, sound recordings must be perceived through listening. The time needed to naturally listen to a sound recording is the same as the length of the recording. Reviewing long recordings can therefore take a large amount of time. Sound is also a linear medium that must be played in sequence, which can make it challenging to navigate sound recordings non-linearly.

Radio production is a process of recording, selecting and re-arranging audio content, so it is desirable to be able to efficiently interact with audio. Modern radio production is performed on a computer screen using a digital audio workstation (DAW). DAWs visualize audio by plotting the amplitude of the audio signal over time, known as an audio waveform. Waveforms allow users to interact with the audio spatially rather than temporally, which is thought to be a faster and easier way to navigate audio recordings. Waveforms display some useful information, but are limited in the information they can convey. For example, when viewing a waveform at the right scale, it is often possible for an experienced user to distinguish between speech and music, but it is not usually possible to determine the style of the music, or what is being said.

Semantic audio analysis is the task of deriving meaning from audio. This is achieved by extracting audio features that describe the sound, and mapping these to a human-readable representation, such as categories or words. This research was partly inspired by a conference presentation from Loviscach (2013), who demonstrated several prototypes that used semantic audio analysis to assist the editing of recorded speech. These included visualizing vowels using colour, detecting and highlighting “umm”s, and identifying repetition. These prototypes were developed to assist the navigation and editing of lecture recordings using custom video editing software (J. Loviscach 2011a).

Applying semantic audio analysis or better visualisation techniques to radio production tasks may allow us to produce richer user interfaces that make it easier and faster for producers to create their programmes. We are interested in discovering whether this approach could be used to improve the radio production process, and which techniques work best. As part of this research, we want to understand how these techniques can be applied to the production of radio to make the process more efficient, such as by reducing the time or effort that is needed to produce the programme.

Making radio production more efficient may free up resources that could be spent on producing higher quality content, or used to making financial savings. The BBC spent £471M on radio production in 2016/17 (Office of Communications 2017, p. 111), so even minor improvements to production workflows could result in large savings. We are also interested in making radio production a more enjoyable and creative experience, where producers spend less time on boring, menial tasks and more time on activities that contribute to the quality of the programme output.

Radio production has not been subject to much previous academic research. The author’s position within the BBC gives us extraordinary access to production staff and working environments that would otherwise be inaccessible to most researchers. We view this as a rare opportunity to conduct research that directly involves professional radio producers and takes place within a genuine work environment.

1.2 Aim and scope

The aim of this work is to improve radio production by developing and evaluating methods for interacting with, and manipulating, recorded audio. Our ambition is to apply these methods to make radio production more efficient or to open up new creative possibilities. In Sections 2.6 and 3.5, we formulate the specific research questions that are answered in this thesis.

Most radio is broadcast live, where the audio production happens in real-time, but in these cases there is little opportunity to make the audio production more efficient. For this reason, we have chose to focus only on the production of recorded audio.

Although most radio listeners in the UK tune in to music-based stations, 38% of the population listen to speech-based radio (Office of Communications 2017, pp. 97-105) and 10% listen to podcasts (RAJAR and IpsosMori 2017), which are normally speech-based. Most original radio content is speech-based, so we will focus our research on the production of speech content.

We want to make the most of our access to professional radio producers and work environments. To do this, we will adopt radio producers as our target user group, by involving them in the development and evaluation of our work, and conduct evaluations in the workplace.

Finally, the intention behind this research is to facilitate creative expression, rather than replace it through automation. Our ambition is to find ways for machines and humans to work to each of their strengths, where simple or menial tasks are automated, but there is always a “human in the loop” that makes the decisions. Our hope is that, in addition to making production activities more efficient, this may unlock opportunities for greater creative expression.

1.3 Thesis structure

Chapter 2 introduces previous work that we will build upon in this thesis. We start by giving a general overview of audio editing and semantic audio analysis to provide context to our research. We then survey related techniques and previous systems that have attempted to assist the navigation and editing of audio. These are categorised into audio visualization, semantic speech interfaces and audio playback interfaces. We then reflect upon the literature and our research aim to formulate our research questions.

Chapter 3 investigates existing audio editing workflows in radio production. Our goal is to help inform the direction of our research by gaining a better understanding of the roles, environment, tools, tasks and challenges involved in real-life radio production. We achieve this by conducting three ethnographic case studies of news, drama and documentary production at the BBC, the results of which present three avenues of research. We conclude by reflecting on the results and previous work to form an intervention strategy for answering our research questions.

Chapter 4 evaluates the effect of audio visualization on radio production. Semantic audio analysis techniques have previously been used to enhance visualizations to assist the navigation of audio recordings. However, the effect of this approach on user performance has not been tested. We conduct a user study that quantitatively measures the performance of three audio visualization techniques for a typical radio production task.

Chapter 5 investigates semantic speech editing in the context of real-life radio production. We design and develop Dialogger — a semantic speech editor that integrates with the BBC’s radio production systems. We then describe the results of our qualitative user study of BBC radio producers, who used our editor in the workplace to produce radio programmes for broadcast. We directly compare semantic editing to the current production workflow, and gain insights into the benefits and limitations of this approach.

Chapter 6 investigates the role of paper as a medium for semantic speech editing. Our findings in Chapters 3 and 5 led us to to develop PaperClip — a novel system for editing speech recordings on paper, using a digital pen interface. We describe how we worked with radio producers to refine our prototype, then evaluate our system through a qualitative study of BBC radio producers in the workplace. We directly compare PaperClip and Dialogger to explore the relative benefits of paper and screen interfaces for semantic speech editing.

Chapter 7 concludes the thesis and considers prospects for further research.

1.4 Contributions

The principal contributions of this thesis are:

Chapter 3: The first formal observational study of radio production workflows. A set of novel theoretical models of audio editing workflows that contribute to the academic understanding of professional radio production.

Chapter 4: The first formal study on the effect of audio waveforms and semantic audio visualization on user performance.

Chapter 5: The first application of semantic speech editing to professional radio production. The first formal user study of semantic speech editing for audio production. Insights into the performance, challenges and limitations of semantic speech editing in the context of radio production.

Chapter 6: A novel approach to editing speech recordings on paper through the combination of semantic speech editing and a digital pen interface, and the first evaluation of this approach. Insights into the relative benefits of paper and screen interfaces for semantic speech editing.

1.5 Associated publications

Portions of the work detailed in this thesis have been presented in the following publications:

Chapter 3: Chris Baume, Mark D. Plumbley, and Janko Ćalić (2015). “Use of audio editors in radio production”. In Proceedings of the 138th Audio Engineering Society Convention.

Chapter 5: Chris Baume, Mark D. Plumbley, Janko Ćalić, and David Frohlich (2018). “A Contextual Study of Semantic Speech Editing in Radio Production”. In International Journal of Human-Computer Studies 115, pp. 67–80.

Chapter 6: Chris Baume, Mark D. Plumbley, David Frohlich, and Janko Ćalić (2018). “PaperClip: A Digital Pen Interface for Semantic Speech Editing in Radio Production”. In Journal of the Audio Engineering Society, 66.4.

Software

As part of this research, we have also developed and released the following systems as open-source software:

2 Background

The focus of this thesis is on the production of audio content for radio broadcast. Radio production is both a technical and creative endeavour that combines complex audio technology with artistic taste and judgement (Barbour 2004). The aim of radio production is to “manipulate sound to create an effect or deliver a message”, which is achieved by combining various sources of sound into a programme (Hausman et al. 2012, pp. 12-20). In this chapter, we review methods, systems and technologies that are related to the production of radio, and to the development of the semantic audio production tools in this thesis.

In Section 2.1, we start by giving a brief overview of the methods and tools of audio editing, which is used to create radio programmes. We show how current editing tools use visual representations to interact with audio, and discuss the limitations of these visualizations. In Section 2.2, we show how semantic audio analysis can be used to extract information from audio content to describe the sound. We then consider previous research that has used this semantic data to improve the navigation and editing of audio through the use of audio visualization (Section 2.3), transcripts of speech (Section 2.4), and audio playback interfaces (Section 2.5). Finally, in Section 2.6, we reflect upon the literature and our research aim to formulate the research questions that we will attempt to answer in this thesis.

2.1 Audio editing

The focus of this thesis is on the production of radio programmes using recorded audio. Recording sound ahead of broadcast brings with it a number of benefits (Hausman et al. 2012, p. 133). Programmes can be much more complex, as many more sound elements can be brought together than would be possible in a live scenario. The producer is able to record re-takes of the same material until they are satisfied, which allows them greater freedom to experiment and fix any mistakes that occurred. The ability to re-record material can lead to better quality content and open up opportunities for a wider range of programme genres, such as drama and documentaries. Pre-recording has a number of practical benefits too. The time of production is not constrained by the broadcast schedule, and content for multiple programmes can also be recorded in one session.

Recorded audio is refined through editing. Audio editing is the process of selecting, re-arranging, correcting and assembling audio content into a finished product (Hausman et al. 2012, p. 112). According to McLeish and Link (2015, p. 44) and Hausman et al. (2012, p. 116), the three primary reasons for editing are to:

Re-arrange recorded material into a more logical sequence.

Remove uninteresting, unwanted, repetitive or technically unacceptable sound.

Reduce the running time.

Underlying these practical aims of audio editing is an important creative process. Hausman et al. (2012, p. 116) state that editing is “somewhat like an art form”, and McLeish and Link (2015, p. 44) suggest that editing can be used as a “creative effect to produce juxtapositions of speech, music, sound and silence”.

2.1.1 Digital audio workstations

For more than fifty years, audio was recorded on magnetic tape. Combining sound sources required the use of a large mixing console which was used to control the sound with faders, knobs and buttons that had to be triggered at the right time. Editing was performed by cutting the magnetic tape with a razor blade and sticking it back together again (Barbour 2004).

The development of fast processors and high quality audio interfaces has since allowed audio to be stored and manipulated digitally using computer software. The primary tool for editing digital audio is the digital audio workstation, or DAW. A DAW is software that provides recording, mixing and editing capabilities for digital audio. DAWs were first introduced in the 1980s (Ingebretsen and Stockham 1982), and have since evolved into powerful tools that are accessible to anybody with a computer. Examples of popular commercial DAWs include Pro Tools by Avid, Logic Pro by Apple and Cubase by Steinberg (Ask Audio 2015; Producer Spot 2015).

DAWs provide a feature-rich toolset for manipulating audio signals. They can be used to navigate and edit audio with very fine control over timing, even down to individual samples. Automation means that changes made to the audio are remembered and repeated each time the audio is played. Automatic cross-fading between clips can be used to create inaudible edits.

The introduction of DAWs has transformed radio broadcasting by allowing fast random access, high storage densities, improved portability, and greater cost-effectiveness than analogue systems (Pizzi 1989). The powerful features of a DAW can replace most of the activities that would traditionally have to be performed using a radio studio. The accessibility of digital audio production has allowed audio editing to be performed by producers without requiring specialist knowledge of sound engineering (Peus 2011). McLeish and Link (2015, p. 44) suggested that the improved usability of DAWs has created a “high level of personal job satisfaction” (McLeish and Link 2015, p. 44). However, the deskilling of audio editing has also caused a reduction in the number of people required to produce radio programmes (Dunaway 2000).

As the audio is being stored and manipulated digitally, DAWs can be used to edit audio without any loss in sound quality. However, when the edited audio is saved, there are two approaches that can be taken — destructive and non-destructive (McLeish and Link 2015, p. 45). Destructive editing occurs when a change is made that alters the structure of the sound file. This prevents the edits from being easily undone. Non-destructive editing occurs when the original audio components are retained and can be re-used to make a change to the edit. DAWs can perform non-destructive editing by saving an edit decision list, or EDL, which records the positions of the edits, but does change any audio files. With EDLs, audio edits can be moved or undone retrospectively. Only when the final edit is ready does the audio get destructively “rendered” or “bounced” to an audio file.

2.1.2 Visual representation

Digital audio editing is performed using a visual representation on a computer screen (Derry 2003; Hausman et al. 2012). Barbour (2004) found through observation and interviews with radio producers that “visualization of audio on a screen has become the dominant focus in a radio production studio”, and that visual representations are used to assemble, modify and balance the audio for radio programmes.

Using visual means to interact with audio has a number of benefits. It allows users to manipulate the audio using a mouse and screen, which are commonly used in computing. Mapping audio to an image allows the temporal information of the sound to be displayed spatially, which means it can be searched and skimmed quickly and randomly. However, visualizing audio is difficult, and there are limitations to what audio visualizations can tell us about the audio.

Bouamrane and Luz (2007) argue that “visually representing audio in a meaningful manner is a particularly difficult task as there is no obvious or intuitive way of doing so”. Currently visual representations cannot fully represent the sound, so producers must listen to comprehend the audio. McLeish and Link (2015, p. 45) argue that “while it is tempting to edit visually using the waveform on the screen, it is essential to listen carefully to the sound, [such as to] distinguish between an end-of-sentence breath and a mid-sentence breath”. Visual representations may also serve as a distraction to the producer. Barbour (2004) found that to concentrate on listening, radio producers disengaged their visual senses by shutting or de-focusing their eyes, or looking away.

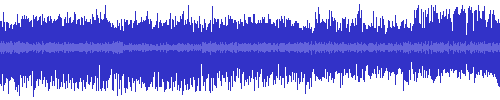

Although we could not find any studies that surveyed the use of visualizations in DAWs, we looked at the five most popular DAWs (Ask Audio 2015) and found that all of them visualized the audio using a “waveform”.

2.1.2.1 Waveforms

An audio waveform is a common graphical representation of an audio signal that is produced by plotting the amplitude of an audio signal over time. Audio signals are periodic, as sound is produced through compression and rarefaction. This can be seen from the repeating curved lines of the waveform. Lines that are closer together represent higher pitch sounds and lines that are farther apart represent a lower pitch. The height of a waveform corresponds to the amplitude, or “volume”, of the audio.

Waveforms have been used to visually represent audio content since the first digital audio workstations started to appear (Ingebretsen and Stockham 1982). Today, they are the default audio visualization used in the DAWs we surveyed. The simplicity of the waveform makes it conceptually easy for users to understand and interpret the audio. Waveforms are relatively compact, so can be arranged vertically on top of each other to view multiple audio tracks simultaneously. They are also computationally efficient to generate, as they are plotted in the time domain.

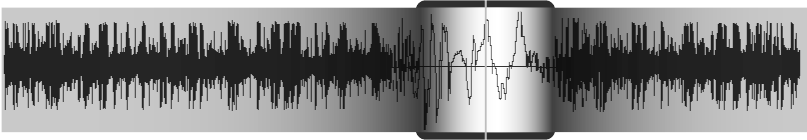

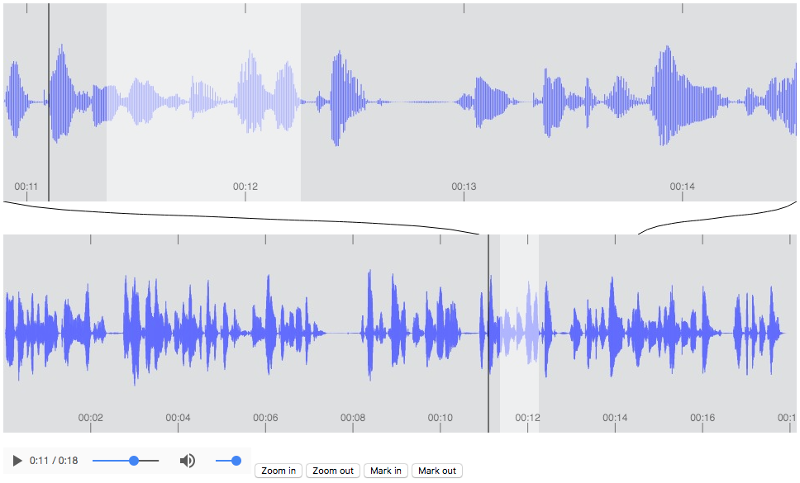

Figure 2.1.2.1.1: Example audio waveforms of speech, demonstrating the effect of zoom on the visibility of frequency information.

Despite their widespread use, waveforms display relatively little information about the audio. Figure 2.1.2.1.1 (a) shows a waveform that has been zoomed-in. At this scale, we can see the individual cycles of the audio signal, and the mix of frequencies that make up the sound. However, when we zoom out, these curves are compressed to the point where they are no longer visible. Figure 2.1.2.1.1 (b) shows a waveform at a zoom level typical in audio production. At this scale, it is impossible to determine which frequencies are present. What remains is an “amplitude envelope” that indicates the volume of the sound over time.

Without frequency content, there is a limit to the amount of information waveforms can convey. The amplitude envelope can be used to identify silences, peaks and the relative volume of different parts of the audio. With experience, it is possible to use the amplitude envelope to distinguish different types of sounds. For example, the frequent short periods of silence in Figure 2.1.2.1.1 (b) indicate that this may be speech, because unlike music, speech is broken up into words.

In order to be able to infer this information, users must learn what the amplitude envelope of different sounds look like. This would be a problem for novice producers, but not for professionals who work with audio on a daily basis. However, the level of information that can be inferred is limited (Hausman et al. 2012, p. 114). For example, it is very difficult to use a waveform to distinguish editorially relevant features, such as individual people’s voices, or different styles of music.

We are interested in learning how audio waveforms affect the performance of audio editing tasks. However, despite the widespread use of waveforms to visualize audio, we could not find any studies that have attempted to evaluate their performance as a method of interacting with audio.

2.1.2.2 Spectrograms

A spectrogram is a plot of the intensity of the Short-Time Fourier Transform (Smith 2007), which visually represents of the spectrum of frequencies in an audio signal over time. Higher frequencies are displayed at the top of a spectrogram, and the intensity of the signal is mapped to the brightness (or sometimes colour) of the image. Figure 2.1.2.2.1 shows an example spectrogram of a speech recording.

Spectrograms clearly display the frequencies that make up the sound, and in what proportions. With spectrograms, time and frequency can be scaled independently. Unlike waveforms, when a spectrogram is viewed at different zoom levels, the frequency information is still visible. Spectrograms are based on frequency analysis, so they are more computationally expensive to generate than waveforms, but this is rarely an issue with modern processors.

Like waveforms, spectrograms are general-purpose, so can be used for a variety of tasks and applications. Spectrograms display a much higher density of information than waveforms, which can be used to infer more information. For example, Zue and Cole (1979; Zue and Lamel 1986) found that expert users were able to use spectrograms to read individual phonemes of speech, but inexperienced users were unable to achieve this. Although spectrograms present the data clearly, users must still learn how to read the information.

Reading spectrograms requires users to have a theoretical understand of audio frequencies and how they behave, such as how a single pitch can be composed of many harmonics. Although spectrograms display the intensity of the signal in each frequency band, it is not apparent what the overall volume of the audio is at a given time. Additionally, spectrograms have a wide range of parameters that control how they are displayed, including FFT window size and shape, linear/non-linear frequency and intensity scaling, min/max values and colour mapping. This creates inconsistencies between different spectrograms, which can make it difficult for users to move between software. Waveforms don’t have as many parameters, so are much more consistent.

In Section 2.3, we will show how waveforms and spectrograms can be enhanced using semantic audio features, but first we will introduce the relevant methods and applications of semantic audio analysis.

2.2 Semantic audio analysis

Semantic audio analysis is the extraction of descriptive and perceptual attributes from an audio signal, which can be used to describe sound in human-readable terms. Semantic audio can make sound recordings less “opaque” by allowing users to understand what is contained in the audio without having to listen to it first. This approach can be applied to the improvement of audio production interfaces. For example, Fazekas and Sandler (2007) enhanced a DAW to assist music producers in navigating and editing their content by automatically segmenting music into verses and choruses. We are interested in how semantic audio analysis can be applied to user interfaces for the purpose of assisting the production of radio.

In this section, we will provide an overview of methods and applications of semantic audio analysis. Semantic audio brings together a wide variety of disciplines, including speech recognition, information retrieval, audio analysis, signal processing, psychoacoustics, and machine learning (Foote 1999). As such, we will only aim to provide a brief overview of selected methods and applications that are relevant to the technology used and the systems developed in this thesis. As the focus of our research is on the pre-production of speech programmes, we will only cover methods and applications related to speech content, which notably excludes the active field of music information retrieval (Downie 2008).

2.2.1 Semantic audio features

Semantic audio analysis is conducted by processing the audio using an algorithm to extract one or more semantically relevant “features”. This process known as feature extraction. Audio features are numerical representations of certain properties of the audio, which are often categorised into low-level and high-level features (Fazekas 2012, p. 31). Low-level features include physical and perceptual properties, such as the energy and spectral content of the sound. High-level audio features correspond to more meaningful concepts, such as words and people, or structural segments, such as programmes or topics. Many semantic audio algorithms use classification or machine learning to map low-level features into high-level features. For example, in speech recognition, a language model is used to map individual phonemes of speech into words and sentences (Junqua and Haton 1995).

There are many different types of audio features that can be extracted. With music, rhythmic features are used to extract the beats and tempo, and harmonic features are used to determine the notes and chords. Speech is in some ways a more complex signal to analyse, so more generic features are often used. In this section, we will describe selected audio features that are touched upon later in this thesis, to help illuminate the reader’s understanding of their origin. Below we have outlined three types: energy, temporal and spectral features.

2.2.1.1 Energy features

Energy features are based on the energy of the audio signal, and how it changes over time. Similarly to audio waveforms, energy features can be used to infer certain properties of the sound, such as whether it is likely to be music or speech. Calculating energy features is often computationally efficient, which makes them attractive for use in real-time applications, or on large data sets.

A simple and popular low-level energy feature is root mean square (RMS), which is calculated as the square root of the mean square of the audio signal (see Equation 2.2.1.1.1). RMS is commonly used in scientific work as a measurement of a signal’s power. The statistics of an audio signal’s RMS value can be used as an effective classifier of music and speech, as demonstrated by Ericsson (2009) and Panagiotakis and Tziritas (2005).

\[\begin{aligned} x_{rms} &= \sqrt{\frac{1}{N} \displaystyle\sum\limits_{i=0}^{N} x_i^2} \end{aligned}\qquad(2.2.1.1.1)\]

where \(x_i\) are the audio samples and \(N\) is the frame size.

RMS is also used as the basis for other features. Low energy ratio (also known as “silent interval frequency”, “silence ratio” or “energy contour dip”) is a measure of the number of RMS values in a moving window that fall below a threshold (Liang et al. 2005). It is used for speech/music discrimination (SMD), and works by exploiting the fact that speech has frequent silent gaps between words, whereas music does not. The threshold can be set as a fixed value (Liang et al. 2005), a function of a moving average (Ericsson 2009) or moving peak value (Saunders 1996).

2.2.1.2 Temporal features

Temporal features are based on statistics of the audio samples. These statistics are calculated in the time domain, so like energy features, temporal features are computationally efficient. A popular temporal feature is zero-crossing rate (ZCR), which is the rate at which a signal crosses the time axis (Zhang and Kuo 2001, 606:37). ZCR can be used as a crude measure of pitch, or distribution of spectral energy.

Early work in SMD (Saunders 1996) identified that “speech signals produce a marked rise in the ZCR during periods of fricativity occurring at the beginning and end of words”, whereas music does not. This causes a bimodality in the distribution of the ZCR, which can be detected by measuring its “skewness”. Panagiotakis and Tziritas (2005) also found that “RMS and ZCR are somewhat correlated for speech signals, while essentially independent for music”, and so the product of RMS and ZCR can also be used as a SMD classifier.

2.2.1.3 Spectral features

Spectral features decompose the audio signal into individual frequency bands to analyse the frequencies that are present in the signal, and in what proportion. This is commonly performed using a fast Fourier transform (Smith 2007).

Spectral centroid (Smaragdis, Radhakrishnan, and Wilson 2009) is a measure of the “centre of mass” of the spectrum, calculated as the mean of the audio frequencies, weighted by the magnitude of each frequency bin (see Equation 2.2.1.3.1). Audio that has more higher frequencies than lower frequencies has a higher spectral centroid value, and vice-versa. Spectral centroid is a good predictor of the perceived “brightness” of the audio, which can be used to distinguish sounds of different timbre (Schubert, Wolfe, and Tarnopolsky 2004).

\[\begin{aligned} s_{centroid} &= \frac{ \sum_{n=0}^{N-1} f(n) x(n) } { \sum_{n=0}^{N-1} x(n) } \end{aligned}\qquad(2.2.1.3.1)\]

where \(x(n)\) is the magnitude and \(f(n)\) is the centre frequency of bin \(n\).

The cepstrum of a signal is the power spectrum of the log of its power spectrum (Noll 1967). The cepstrum is a compact representation of how the frequencies in a signal change over time. The Mel-frequency cepstrum is calculated by spacing the frequency bands using the Mel scale (Stevens and Volkmann 1937), which gives a better approximation to the human hearing system. The audio features produced through this process are called Mel-frequency Cepstral Coefficients, or MFCCs (Imai 1983). MFCCs are commonly used as a speech analysis tool, and have been successfully applied to SMD (Liang et al. 2005; Pikrakis, Giannakopoulos, and Theodoridis 2008; A. Pikrakis, Giannakopoulos, and Theodoridis 2006; Sell and Clark 2014; Wieser, Husinsky, and Seidl 2014) and speaker segmentation (Anguera Miro et al. 2012; Friedland et al. 2009), as well as many other problems.

Now that we have a general understanding of some common semantic audio features, we will see how they have been used for applications related to radio production.

2.2.2 Applications

Semantic audio analysis allows us to gain insights into the content of audio recordings without having to listen to them. The semantic audio features we described have already been used to tackle a variety of problems (Foote 1999). In this section, we outline the aim, methods and performance of three applications of semantic audio analysis that are used later in this thesis: speech/music discrimination, speaker diarization and automatic speech recognition.

2.2.2.1 Speech/music discrimination

Speech/music discrimination (SMD) is the task of segmenting and labelling audio content into sections of either music or speech. Many SMD systems have been specifically developed for use with radio broadcasts (Saunders 1996; Aggelos Pikrakis, Giannakopoulos, and Theodoridis 2006; Pikrakis, Giannakopoulos, and Theodoridis 2008; Ericsson 2009; Wieser, Husinsky, and Seidl 2014) and television broadcasts (Seyerlehner et al. 2007; Sell and Clark 2014). SMD systems have been successfully implemented using a variety of different features, including low energy ratio (Ericsson 2009), ZCR skewness (Saunders 1996), spectral entropy (Aggelos Pikrakis, Giannakopoulos, and Theodoridis 2006), continuous frequency activation (Seyerlehner et al. 2007; Wieser, Husinsky, and Seidl 2014), chromagrams (Sell and Clark 2014) and MFCCs (Pikrakis, Giannakopoulos, and Theodoridis 2008). Carey, Parris, and Lloyd-Thomas (1999) compares the performance of some common SMD audio features.

Most SMD systems report high accuracy figures of 96% and above, which shows that automatic SMD is likely to be useful in real-life applications. However, as Pikrakis, Giannakopoulos, and Theodoridis (2008) argues, each system is evaluated using different data sets that are inconsistent in content and length, which makes it difficult to compare them.

Wieser, Husinsky, and Seidl (2014) showed that by including a “human in the loop”, the accuracy of their SMD increased from 96.6% to 100%. They achieved this by adding a user-adjustable slider to their interface that controlled the detection threshold. When the user adjusted the slider, they could see the effect on the segmentation directly to help them find the correct setting.

2.2.2.2 Speaker diarization

Speaker diarization is the task of segmenting an audio recording into labelled segments that identify “who spoke when” (Anguera Miro et al. 2012). With this task, the location of any speech content and number of speakers is usually unknown. Speaker diarization has clear applications to the production of radio, where there are often multiple people speaking in a single recording, and it is desirable to know where they are speaking without having to listen.

Review papers from Tranter and Reynolds (2006) and Anguera Miro et al. (2012) show that the vast majority of speaker diarization systems are based on clustering of MFCCs, and that current research is focused on the improvement of clustering algorithms and pre-processing stages, rather than audio features. They also show that most of the recent research has focused on recordings of meetings, rather than broadcast content.

Anguera Miro et al. (2012) found that the average error rate for speaker diarization systems was 11.6% and 17.7% for two standard data sets (National Institute of Standards and Technology (NIST) 2016). However, these data sets are based on microphone recordings of meetings, rather than broadcast content. Bell et al. (2015) conducted an evaluation of speaker diarization systems on television recordings of multiple genres. These results showed that the error rate was 47.5%, which is considerably higher. However, rather than just trying to match speakers within individual recordings, their evaluation was conducted across multiple recordings, which made matching speakers between them all more difficult. A breakdown of the results showed that most of the errors were misidentification of speakers, and that misidentification of speech accounted for less than 8% of the error rate.

Speaker diarization systems assign a unique identity to each speaker, but they do not attempt to identify who the speaker is. Speaker recognition is the task of identifying a person based on the sound of their voice (Doddington 1985; Lee, Soong, and Paliwal 1999). Extracting metadata such as participant names and genders from radio content could be used to enable automated information searching and indexing (Kinnunen and Li 2010). Speaker recognition relies on access to a database of trained speaker models, which represent people’s voices. In radio, many of the contributors are from a small pool of presenters, so it may be feasible to use speaker recognition techniques to detect their voices with sufficient accuracy.

Raimond et al. (2014) introduced the BBC World Service Archive prototype, which was an interface that used automatic keyword tagging and crowd-sourcing to support the search and discovery of a large radio archive. The interface used speaker diarization and speaker recognition to help users navigate within individual radio programmes. Figure 2.2.2.2.1 shows an example of a radio programme that has been segmented into five named speakers.

2.2.2.3 Automatic speech recognition

Automatic speech recognition (ASR) can be used to automatically convert speech to text. The ability to convert audio signals to text opens up many possibilities in radio production, such as being able to navigate audio recordings through searching and skimming. These opportunities are discussed in greater detail in Section 2.4.

Modern ASR systems can be broken down into two main stages (Junqua and Haton 1995). The first stage uses an acoustic model to map the audio to a set of phonemes, which are the individual noises that make up the speech. In the second stage, a language model converts the sequence of phonemes into words and sentences. Both the acoustic and language models are developed using machine learning techniques to train the system based on recordings and transcripts of speech. As such, the success of an ASR system depends on the quality and fitness of the data that it is trained on.

Despite advances in the field (Lee, Soong, and Paliwal 1999), ASR produces erroneous transcripts. Bell et al. (2015) conducted an evaluation of ASR systems on television programmes of various genres. Each system was judged by the proportion of incorrect words, known as the “word error rate” (WER). The mean average WER of the systems tested was 23.7%, however the variance across programme genres was high, with the WER varying from 10 – 41% across the 16 genres tested.

Figure 2.2.2.3.1 shows an example of a transcript generated by an ASR system with a WER of approximately 16%. ASR transcripts don’t include letter capitalisation or punctuation, but this can be estimated and added using post-processing (Gravano, Jansche, and Bacchiani 2009).

[Speaker 1] the manchurian candidate both seems to play up these fears and to be in a way in she comes to sudden he can have a critique of the idea of the moral panic around brainwashing i wondered where pavlov fits into that story and how seriously are his ideas taken in the literature of the nineteen fifties around brainwashing [Speaker 2] we'll have a viz is everywhere in in the discussion of the american p.o.w.s they're sometimes referred to in magazine articles and in popular commentary at the time as as prisoners of pavlov so there was a larger of of of our popular discussion about pavlov often not very well informed but only rouge to his experiments with the conditioned reflex and his famous salivating dogs and ringing bow and so on that was was everywhere so certainly many americans would have at associated some kind of pavlovian conditioning with what had been done to the p.o.w.s but but it wasn't generally carried very far into in terms of actually trying to him better understand how pavlovian principles or psychology might might actually have been at work in the p.o.w. camps

2.3 Audio visualization

In the last section, we explored how semantic audio analysis can be used to extract information from audio, but did not discuss how such information is presented to the user. As we shall see in this section, semantic information can be used to support interaction with audio recordings by using it to enhance audio visualizations.

Audio visualization is the task of mapping an audio signal to an image. The human visual system is capable of viewing an entire image at once, and is adept at searching and skimming images (Wolfe and Horowitz 2004). On the other hand, sound must be consumed serially and over a period of time. Mapping sound to vision allows temporal information to be displayed spatially, which can overcome some of the limitations of a time-based medium like sound.

We saw in Section 2.1.1 that audio visualization is already used by DAWs to help users navigate and edit audio content. However, we also saw that current audio visualizations are limited in what they can display. For example, waveforms only display amplitude information, much of which cannot be seen at typical zoom levels. To effectively navigate audio waveforms, users must read the shape of the visualization.

In this section, we will see how previous research has proposed a number of enhancements to current audio visualizations that aim to improve their performance. We start by looking at the relationship between sound and vision, and considering the perceptual mappings between the two that already exist. We then review techniques that have previously been used to process or enhance waveforms and spectrograms to make it easier for users to navigate and edit audio recordings.

2.3.1 Crossmodality

To be able to represent audio visually, we must map auditory properties to visual properties. When attempting to link sound and vision, it is desirable to create a mapping that is coherent and makes sense to the user. By creating an audio visualisation that “looks likes it sounds”, it might be possible for users to comprehend the sound without having to listen to it.

Crossmodal perception is a term used to describe interaction between the different senses (Spence 2011). Previous work has shown that there are perceptual mappings between auditory and visual stimuli that are experienced by most of the population. These could be exploited to aid the navigation and editing of audio recordings.

The “bouba/kiki effect” is a demonstration of crossmodal mapping between speech sounds and the visual shape of objects, originally discovered in an experiment by Köhler (1929). Participants were shown two abstract shapes, shown in Figure 2.3.1.1, and asked which shape was called “bouba” and which was called “kiki”Köhler used the words “baluma” and “takete” in the original experiment, but the result was the same.

. Ramachandran and Hubbard (2001) found that 95–98% of the population gave the same answerThe vast majority of participants chose to name the curvy, rounded shape on the left “bouba”, and the sharp, pointy shape on the right “kiki”.

. This is an example of just one audio-visual mapping that is common amongst the population.

Spence (2011) presented a review of psychology experiments that attempted to find crossmodal links in the human brain, including audio-visual mapping. He found that there was strong evidence for five audio-visual mappings, shown in Table 2.3.1.1. These findings were supported by Tsiros (2014), who attempted to generate images to match different sounds, and measured their success through a user study. In addition to confirming the strong links between loudness/size and pitch/elevation, he found weaker links for pitch/colour, dissonance/granularity, and dissonance/colour complexity.

| Link | Direction |

|---|---|

| Loudness/brightness | louder=brighter |

| Pitch/elevation | higher=higher |

| Pitch/size | higher=smaller |

| Loudness/size | louder=bigger |

| Pitch/spatial frequency | higher=higher |

Current audio visualizations exploit some of these crossmodal mappings. For example, waveforms map loudness to size, and spectrograms map loudness to brightness, and pitch to elevation. However, this previous work shows that there are many more links between sound and vision that could be further exploited by audio visualizations.

2.3.3 Spectrograms

As we discussed in Section 2.1.2.2, spectrograms are an information-rich representation of the spectrum of frequencies in an audio signal over time, but they can be difficult for novice users to read. Lin et al. (2012) introduced a method of filtering spectrograms to visually emphasise non-speech events in long audio recordings. The filtering was done using an “image saliency algorithm” that detected differences in the intensity and orientation of the spectrogram. This saliency-maximised spectrogram was integrated into an audio navigation interface called Timeliner (Goudeseune 2012), which displayed the spectrogram alongside a waveform. Lin et al. (2013) describes an evaluation in which 12 novice participants used Timeliner to find sound effects hidden in meeting room recordings using both saliency-maximised and normal spectrograms. The results show that saliency-maximised spectrograms significantly outperformed normal spectrograms. Filtering spectrograms shows promise as a way of detecting unusual events, however it is unclear how useful this sort of application would be in the context of radio production.

2.4 Semantic speech interfaces

Speech recordings can be converted to text in a process known as “transcription”. Transcripts can be used to record exactly what somebody said, and the transcript text can be read, copied, shared, skimmed and searched using a variety of tools, such as word processors, or on paper. Hausman et al. (2012, p. 133) notes that radio producers currently “cut, paste and copy sound files much the same way we use a word processor to manipulate words, sentences and paragraphs”. In this section, we will see how transcripts can be used as an interface to aid the navigation and editing of speech recordings.

2.4.1 Transcript generation

Transcripts can be written manually, either using pen and paper or a word processor, but this is a slow and tedious process. Transcription can be completed faster by only transcribing the most salient words, but this makes the transcript much less readable, particularly to others who haven’t heard the original recording. Alternatively, a third-party can be used to transcribe the speech, but this slow and expensive. For example, transcribing speech using rev.com currently costs US$1 per minute and takes 12 hourshttps://www.rev.com/transcription, accessed 11/12/2017

.

As we saw in Section 2.2.2.3, ASR can be used to convert speech to text automatically. ASR is quicker and cheaper than manual transcription. ASR also produces accurate timestamps for each word, which can be used to precisely navigate and edit the audio, but word-level timestamps can also be added to manually-written transcripts using speech alignment (Griggs 2007; Boháč and Blavka 2013).

Erroneous transcripts reduce listener comprehension (Stark, Whittaker, and Hirschberg 2000; Vemuri et al. 2004) and increase the time it takes to search audio content (Ranjan, Balakrishnan, and Chignell 2006) and correct errors (Burke, Amento, and Isenhour 2006). However, despite the errors in ASR transcripts, they provide a highly effective tool for browsing audio content as users can visually scan the text to focus on regions of interest, known as “strategic fixation” (Whittaker and Hirschberg 2007).

2.4.3 Semantic speech editing

In addition to supporting the navigation of speech recordings, transcripts have also been used as a method of editing speech content, known as semantic speech editing. The first of these was the “Large Interactive Display System Wave Speech Editor”, catchily shortened as LIDSWSEdit, from Apperley et al. (2002), which used ASR transcripts to allow users to navigate and edit lecture recordings. Any edits made to the transcript were correspondingly applied to the underlying audio recording. Users could re-arrange sentences and words by selecting the text, and using a drag-and-drop action. Alternatively, speech could be removed by selecting text then clicking a button to either delete the selected text, or everything except the selected text. LIDSWSEdit was further developed into the “TRanscription-based Audio EDitor”, or TRAED (Masoodian et al. 2006). TRAED used the same editing actions as LIDSWSEdit, but rather than displaying the text and audio waveform separately, it displayed the waveform in-line with the text. Individual words were delineated by drawing boxes around the waveform/word pair. The boundary between each pair could be adjusted by dragging the boundary edge. The author could not find any user studies of LIDSWSEdit or TRAED.

Whittaker and Amento (2004) created an interface for editing voicemail messages using ASR transcripts. Users could cut-and-paste parts that they wanted, or delete parts they didn’t. They evaluated their system in a formal study of 16 voicemail users, which found that semantic editing was faster and as accurate as editing with a waveform. Crucially, they found that this was true even though the transcripts had an average word error rate of 28%. This suggests that semantic editing is beneficial even when using erroneous transcripts.

Rubin et al. (2013; Rubin 2015) presented a novel interface for creating “audio stories” that combine speech and music, which is similar to radio production. The interface, shown in Figure 2.4.3.1, used an editable transcript with two columns, one for each of a pair of speakers. It allowed the user to cut, copy, paste and delete the audio using the text, and highlighted repeated words and similar sentences. The transcripts were generated using an online service that produced 100% accurate, or “verbatim”, transcripts. As they were manually-generated, the transcripts also included “umm”s, breaths and pauses, which were displayed and labelled in the interface. However, the manual transcripts did not include timestamps, so speech alignment software was used to recover the timestamps for each word. The system also included additional functionality for finding and adding music tracks, and for varying the length of music using automatic looping. The system was evaluated through a short informal study of four participants where the editing capabilities received positive feedback. The author could not find any follow-up studies.

Sivaraman, Yoon, and Mitros (2016) created a semantic editing system for asynchronous voice-based discussions, where users could quickly edit their speech recording before sending it to the recipient. Their system used near-live ASR and detected pauses in the speech. Their interface allowed users to delete selected words/pauses, insert additional pauses and fix incorrect words. In a formal qualitative study of their system with nine users, they found that text-based editing was considered good enough to replace waveform editing, and to be more accessible. They observed that most users only used the system to make fine-grained edits, instead of editing large chunks. Users said that the transcript also allowed them to quickly review all the points that were made, and that the errors in the transcript weren’t a heavy distraction.

Yoon et al. (2014) created a collaborative tablet-based document annotation system called RichReview, which offered users three modalities in which to annotate documents — free-form inking, voice recording and deictic gestures (i.e. pointing to areas of interest). The voice recordings were displayed using a waveform, overlaid with an ASR transcript of the speech. Users could trim or tidy the voice recordings by drawing a line through words or pauses to remove them. The system was evaluated using a qualitative study of 12 students which found that the editing features were considered easy to use and efficient for removing “umm”s and long pauses. However many participants reported that the transcripts were not accurate enough to use without having to listen to the audio. Yoon et al. (2016) describes two deployment studies that used a similar system called RichReview++, but they did not report there being any semantic editing functionality.

2.4.4 Video editing

Semantic speech editing has also been used to support video editing. SILVER (Casares et al. 2002; Long et al. 2003) was a video editor that aligned words from subtitles to the video, and displayed them in a transcript window. Gaps, errors and edits were displayed in the transcript using special characters, such as “||’‘for clip boundaries, “—’’ for gaps, and”*’’ for noise or recognition errors. The video could be edited by deleting text in the transcript. SILVER was evaluated in an informal study with seven students, but the study did not report any results about the transcript-based editing feature.

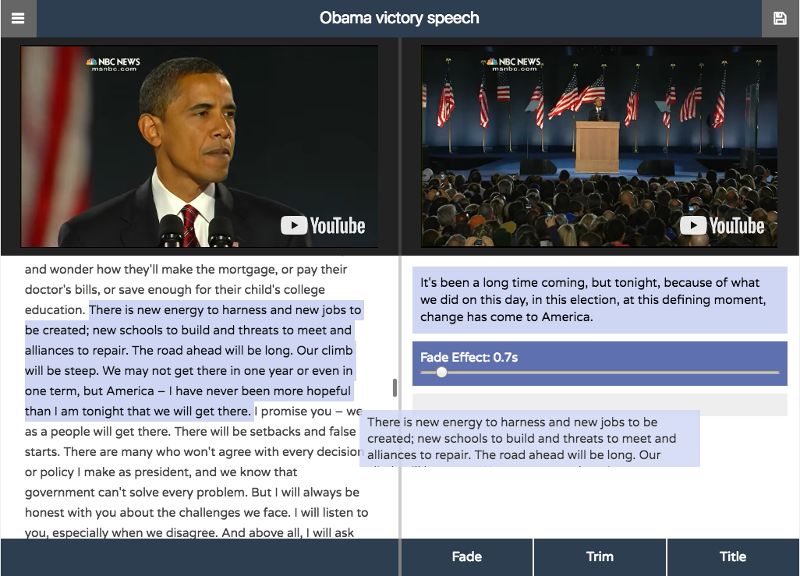

Hyperaudio Pad is an open-source audio and video editor, first proposed by Boas (2011), and now available online as a free service (Hyperaudio Inc. 2016). This web-based interface, shown in Figure 2.4.4.1, allows users to navigate and edit online media using transcripts, which are generated from subtitles. Editing is performed by selecting a part of the transcript and dragging it into a window on the right to create a “clip”. Clips can be re-ordered, split using a “trim” tool, and fade effects can be added between clips. Clips from different recordings can be mixed together, and the final edited version can be played and shared with others. No user studies of this system could be found.

When editing a video interview, it is desirable to avoid making a cut while the person speaking is in shot, because it causes the image to jump unnaturally. Berthouzoz, Li, and Agrawala (2012) used image processing algorithms to create a video editor that can help the user hide these edit points. The system had an editable transcript window that displayed suitable edit points and allowed the user to edit the video by selecting and deleting text. The transcripts were generated manually using an online crowd-sourcing service, and word timings were added using speech alignment software. The system also allowed users to easily remove “umm”s or repeated words as they were explicitly marked in the manual transcript. No user study was reported, however the system received positive feedback from nine professionals who were given a demonstration.

2.4.5 Pre-written scripts

The systems so far have only considered transcripts that have been generated from the speech itself. Sometimes speech is recorded based on a pre-written script, or from notes. Avid Technology released a feature for their Media Composer video editing software in 2007 called ScriptSync (Avid Technology Inc. 2011). This feature aligns a user-supplied transcript to a video recording by placing a marker in the video for each line of the transcript (Griggs 2007). This allows users to jump to a particular line, or see which line in the transcript corresponds to the current point in the video. A second version of ScriptSync was launched in February 2017 (Avid Technology Inc. 2017) which added script correction and collaborative note-taking.

Shin, Li, and Durand (2016) created a system called Voice Script that supports an integrated workflow for writing scripts, and recording/editing audio. An informal study with four amateur participants found that it could support various workflows including multiple iterations. It included a “master script” layout to bring together different recordings, which was found to work well. A second study of four amateur participants directly compared the system to that of Rubin et al. (2013), which found that participants were able to complete an audio production task 25% faster using the Voice Script system. This study demonstrates that for workflows that involve pre-written scripts, there is potential to improve the audio editing by using an integrated writing and editing system.

QuickCut from Truong et al. (2016) was an interface designed to help producers edit a narrated video from a pre-written script, voiceover audio and raw video footage. Producers could label their video footage using their voice, which was manually transcribed using a crowd-sourced online service in combination with speech alignment. Selecting text in the script also selected the corresponding segment in the voiceover audio, and displayed video clips labelled with similar words. After selecting an appropriate clip, it could be associated with a position in the script by using drag-and-drop to add it to the timeline. The completed timeline could then be exported as an EDL for use in professional video editing software. QuickCut was evaluated by the researchers themselves and one professional filmmaker, who were able to use the system to produce a minute of video in 8–31 minutes, rather than the 2–5 hours professional filmmakers suggest they require. Voice-based logging makes sense for logging video footage as it is easy to watch and talk at the same time. However, for speech content it would be difficult to talk and listen simultaneously. The ability to export edits to professional software allows for a smooth continuation of the production workflow.

2.4.6 Transcript correction

Whittaker and Amento (2004) found that users of their semantic speech editing system “wanted to be able to correct errors they found in the transcript”. ASR errors reduce listener comprehension (Stark, Whittaker, and Hirschberg 2000; Vemuri et al. 2004) and increase the time it takes to search audio content (Ranjan, Balakrishnan, and Chignell 2006) and correct errors (Burke, Amento, and Isenhour 2006).

Four of the ASR transcript interfaces mentioned above included correction functionality, with each using a different method to edit the text. SILVER (Casares et al. 2002) required the user to both type the replacement word and select the start and end time of the word in the video timeline. TRAED (Masoodian et al. 2006) allowed users to correct a word by selecting it and typing the replacement. Typing a space created a new word by dividing the time of the original word in half. Sivaraman, Yoon, and Mitros (2016) initially planned to have two editing modes — one for audio editing and the other for text editing. However, in pilot testing they found that having two modes confused users, so they developed a pop-up box that indicated to the user when they are editing the text, rather than the audio. SCANMail did not initially include transcript correction, but this was later added and evaluated by Burke, Amento, and Isenhour (2006). These changes allowed users to either replace an individual word by selecting a replacement from a drop-down menu, or replace multiple words by selecting them and typing the replacement. A user study of 16 participants who corrected voicemail messages found that compared to typing, selecting the replacement word required the user to listen to less of the audio.

The correction process can be made more efficient by correlating the user’s input with contextual information (Suhm, Myers, and Waibel 2001). In particular, the words immediately before and after the incorrect word can be used to reduce the number of candidate words, or even estimate the replacement. Liang, Iwano, and Shinoda (2014) showed that once an incorrect word is identified, in 30% of cases the correct word can automatically be inferred using \(n\)-grams and acoustic features.

Correction is normally a process that happens after ASR transcription, but as Wald et al. (2007) demonstrated, it is possible to correct ASR transcripts in real-time as the audio is captured. However, during recordings, radio producers are normally pre-occupied with operating the equipment, asking questions or listening to the answers. As this does not leave enough space for performing real-time correction, an extra producer would be required, which is costly.

The above systems used a keyboard and mouse interface to correct transcripts. Suhm, Myers, and Waibel (2001) tested alternatives methods that used vocal correction and a pen interface. They found that for skilled typists, keyboard and mouse input was faster than the alternatives, but that voice and pen input would be attractive for use by poor typists, or for devices that don’t allow fast keyboard input.

ASR transcripts contain errors in the text, but sometimes there are errors in the speech itself that producers may want to correct. TypeTalker from Arawjo, Yoon, and Guimbretière (2017) was an interface for editing synthesised speech using ASR transcripts. The speech was not synthesised from text, but from a recording of the user speaking. This was done to reduce the self-consciousness that results from hearing one’s own voice. As well as being able to remove unwanted speech, the use of speech synthesis meant that new speech could be synthesised and words could be changed. Adobe Systems Inc. (2016) demonstrated an unreleased prototype system called VoCo that enabled users to change a word in a speech recording, whilst retaining the natural characteristics of the original speaker’s voice. Such technology could be used to create seamless repairs to errors in speech recordings. However, the use of such technology has ethical and legal implications, particularly in a broadcasting context (Bendel 2017)There’s an interesting episode of Radiolab that discusses this: http://www.radiolab.org/story/breaking-news/

.

2.4.7 Confidence shading

In addition to producing a transcript, many ASR systems return a confidence score for each transcribed word, indicating how sure the system is that the word is correct. Confidence shading is a technique for displaying this score by colouring words with a low confidence score in a lighter shade (Suhm, Myers, and Waibel 2001). Confidence shading has been used to try to make mistakes easier to locate, and transcripts easier to read. However, confidence scores may themselves be incorrect by indicating that a correct word is probably incorrect, or that an incorrect word is probably correct. The trade-off between these two types of errors is controlled using the threshold value (Feng and Sears 2004).

Suhm, Myers, and Waibel (2001) conducted a user study of 15 participants who corrected an ASR transcript with and without confidence shading (in this case, highlighting). The results showed that correction with confidence shading took slightly longer than without, although this was not statistically significant. Conversely, Burke, Amento, and Isenhour (2006) reported that in their user study of 16 participants, most agreed that confidence shading was helpful for identifying mistakes in the transcripts. One notable difference between these studies is that Suhm, Myers, and Waibel (2001) optimised their confidence threshold to minimise the overall accuracy of the confidence shading, whilst Burke, Amento, and Isenhour (2006) increased the threshold to treat false negatives more seriously.

Vemuri et al. (2004) studied whether confidence shading improved comprehension of the transcript. They conducted a user study of 34 participants and measured the comprehension of short audio clips when using ASR transcripts with and without confidence shading. Although the results indicated better comprehension with confidence shading, there was no statistically significant difference.

2.5 Audio playback interfaces

The previous work we have considered so far has used audio visualization and transcripts to represent audio content. Visual presentation of audio content makes it easier for users to search and skim the information, but it is difficult, if not impossible, for humans to fully comprehend sound using visual means. Listening is the natural way for humans to consume audio content, but the time required to listen can make it a lengthy and inefficient process.